Deploy & Inference Mistral 7B Instruct on SageMaker JumpStart

Learn how to deploy Mistral 7B Instruct on SageMaker JumpStart using an ml.g5.2xlarge instance and run inference against it.

Mistral-7B Instruct is a fine-tuned version of the Mistral-7B language model, trained specifically on instruction datasets. This fine-tuning lets the model generalize to new tasks and perform strongly on a variety of benchmarks.

In particular, Mistral-7B Instruct outperforms all other 7B models on MT-Bench, a benchmark that measures how well language models follow instructions, and it is comparable to 13B chat models, which have nearly twice as many parameters.

These results show how much instruction fine-tuning can improve a language model on specific tasks, and that Mistral-7B is a versatile model suited to a wide range of applications.

In this tutorial, we will deploy the Mistral 7B Instruct model on Amazon SageMaker JumpStart and then run basic inference against it.

Deploy Mistral 7B Instruct

To deploy Mistral 7B Instruct on Amazon SageMaker JumpStart, let's perform the following steps.

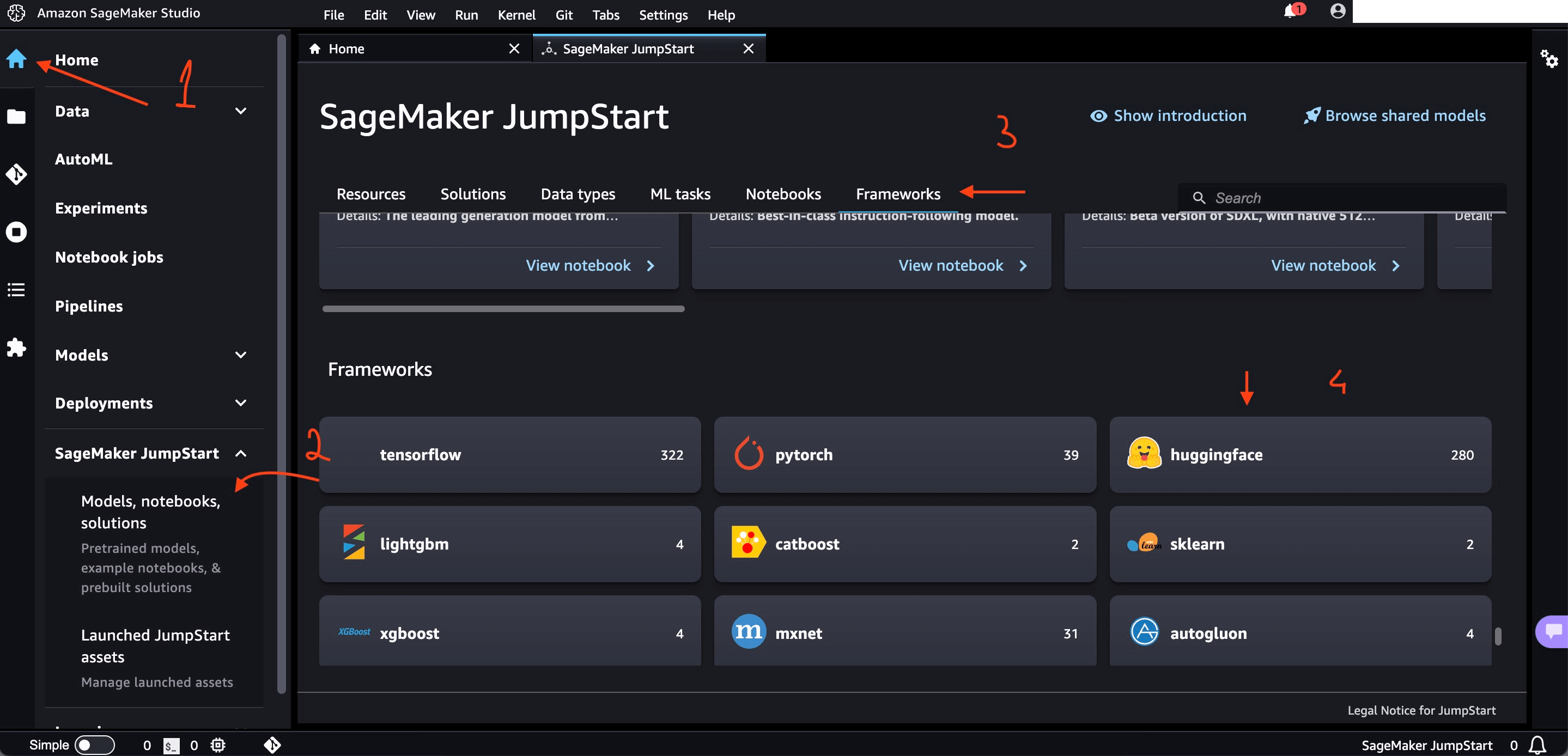

1. Launch SageMaker JumpStart on Amazon SageMaker

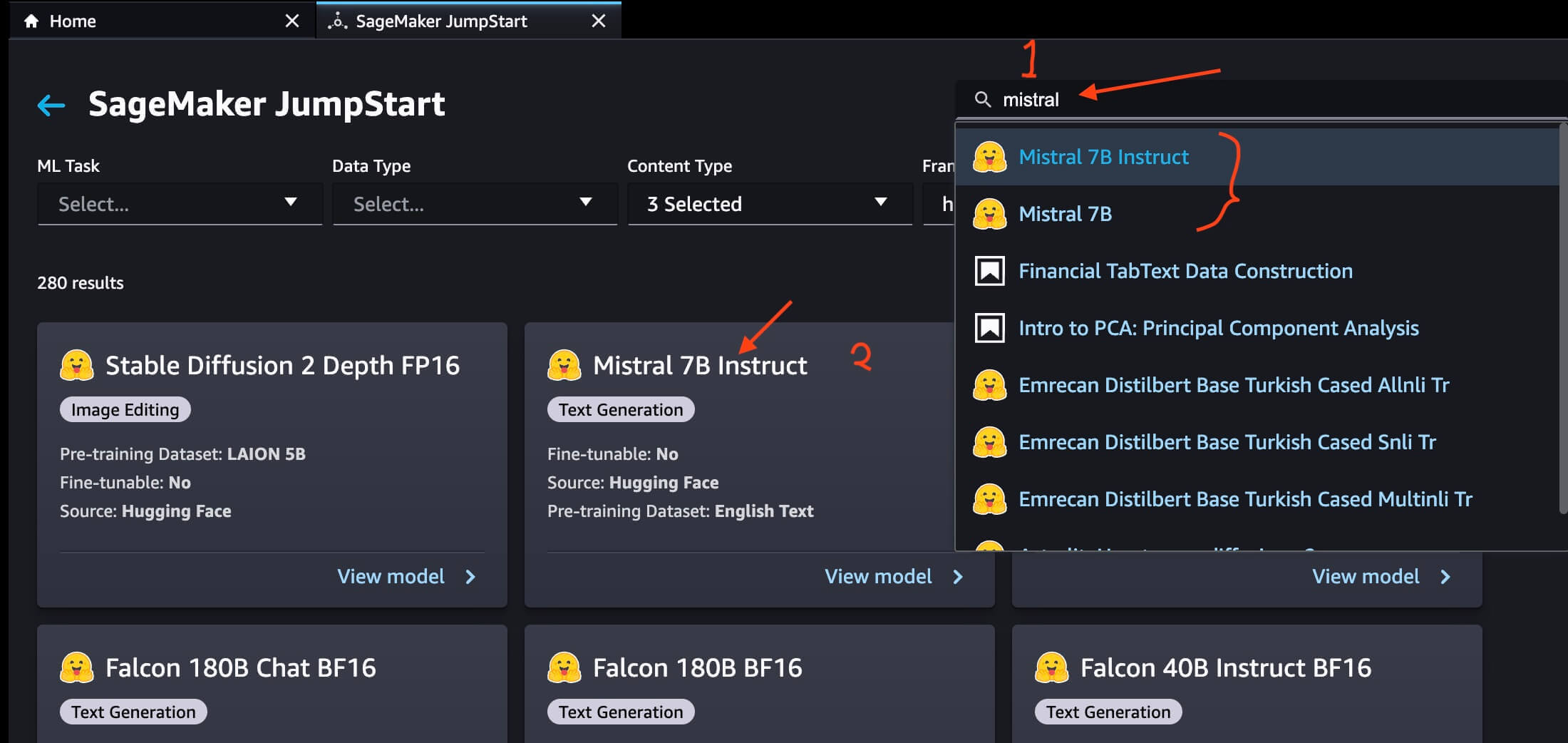

2. Find Mistral 7B Instruct among the Hugging Face models on Amazon SageMaker JumpStart

Both Mistral 7B and Mistral 7B Instruct are available on SageMaker JumpStart, and neither is fine-tunable there.

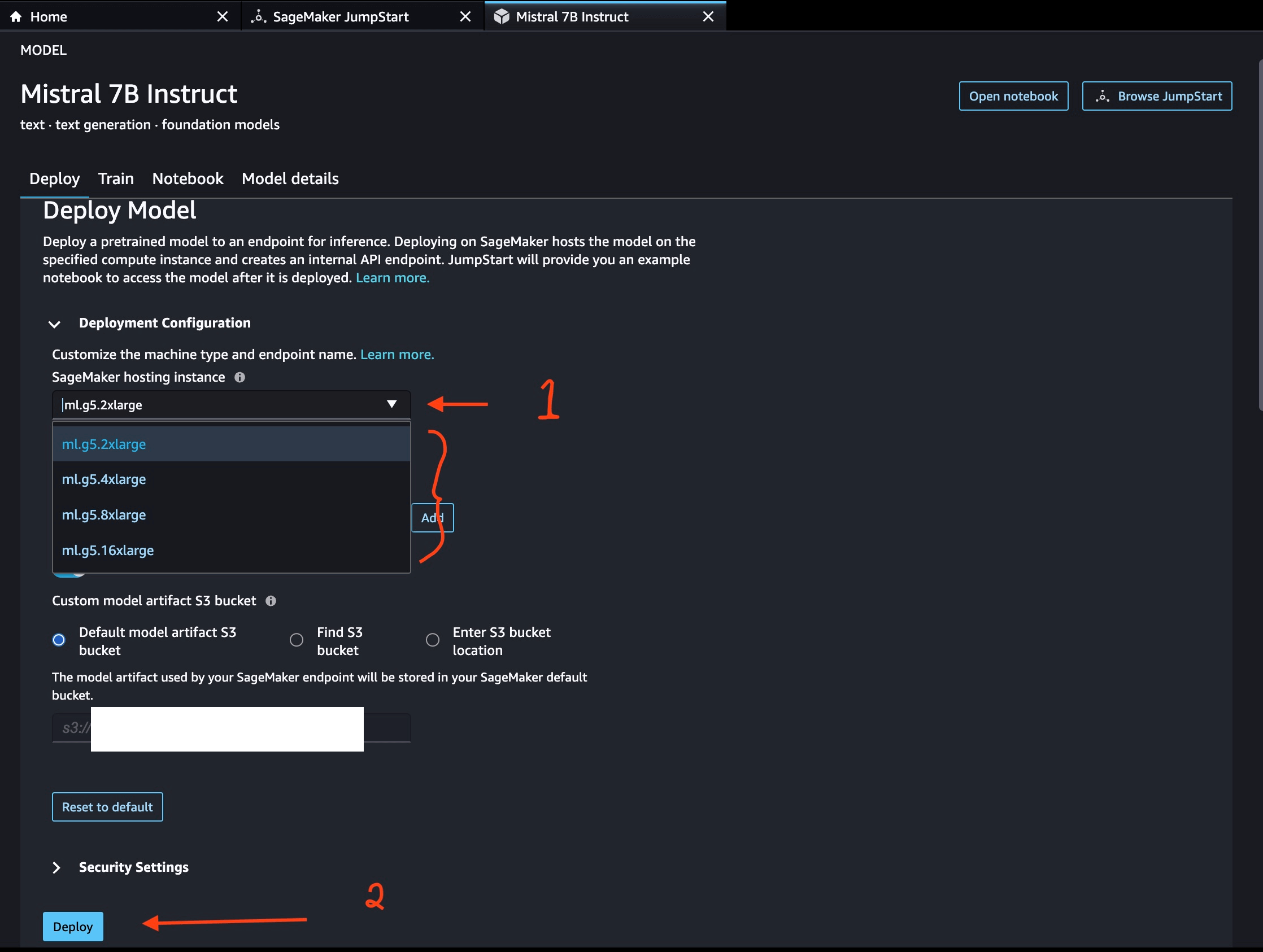

3. Deploy Mistral 7B Instruct on Amazon SageMaker JumpStart

You can choose any SageMaker hosting instance from ml.g5.2xlarge up to ml.g5.16xlarge to deploy the Mistral 7B Instruct LLM.

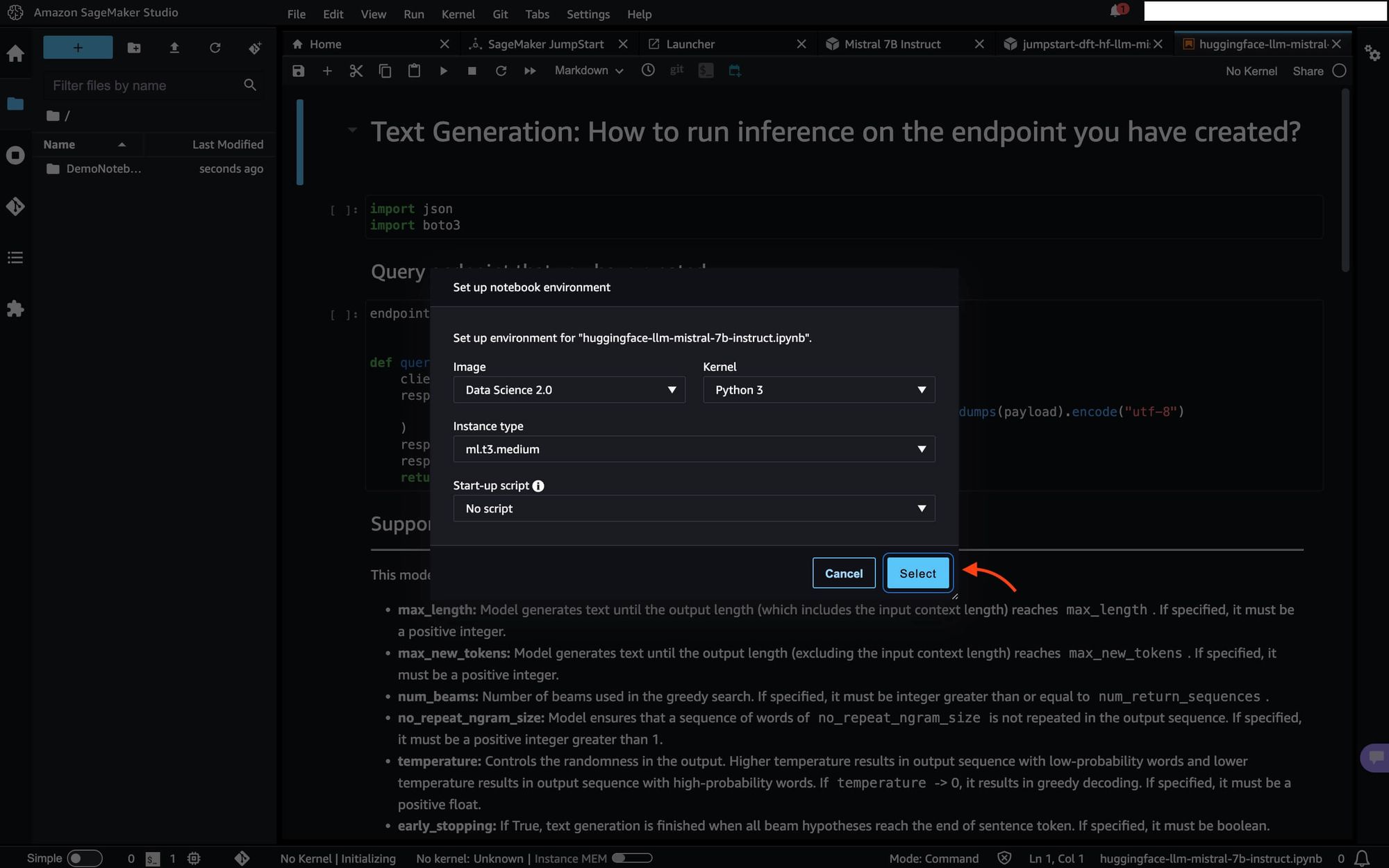
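If you would rather deploy from code than from the Studio UI, the SageMaker Python SDK provides a JumpStartModel class for this. The sketch below is a hedged example, not the exact code behind the Studio button. It assumes the JumpStart model ID is huggingface-llm-mistral-7b-instruct (check the model card in Studio for the exact ID), and the instance list is an assumption taken from the range mentioned above rather than from the SDK. The sagemaker import is deferred so the instance check can run without the SDK installed. Deploying creates a billable endpoint.

```python
# ml.g5 sizes from ml.g5.2xlarge up to ml.g5.16xlarge, as mentioned in the text.
# Assumption: confirm the supported list on the JumpStart model card.
SUPPORTED_INSTANCE_TYPES = (
    "ml.g5.2xlarge",
    "ml.g5.4xlarge",
    "ml.g5.8xlarge",
    "ml.g5.12xlarge",
    "ml.g5.16xlarge",
)

# Assumed JumpStart model ID for Mistral 7B Instruct.
MODEL_ID = "huggingface-llm-mistral-7b-instruct"


def check_instance_type(instance_type: str) -> str:
    """Return instance_type unchanged, or raise ValueError if it is outside the range above."""
    if instance_type not in SUPPORTED_INSTANCE_TYPES:
        raise ValueError(
            f"{instance_type!r} is not one of: {', '.join(SUPPORTED_INSTANCE_TYPES)}"
        )
    return instance_type


def deploy_mistral_instruct(instance_type: str = "ml.g5.2xlarge"):
    """Deploy the JumpStart model to a real-time endpoint and return the predictor."""
    check_instance_type(instance_type)
    # Imported lazily: needs the sagemaker package and AWS credentials with SageMaker access.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=MODEL_ID, instance_type=instance_type)
    # deploy() waits for the endpoint to be created by default
    return model.deploy()
```

For example, `predictor = deploy_mistral_instruct("ml.g5.2xlarge")`. An endpoint created this way gets a generated name, so read it from `predictor.endpoint_name` instead of assuming the name used in the inference code later in this tutorial.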

If you plan to use the SageMaker-provided notebook to deploy Mistral 7B, you can use the Data Science 3.0 image with the Python 3 kernel. Also make sure you have a recent version of the sagemaker Python package (2.188.0 or later) by running `!pip install -Uq 'sagemaker>=2.188.0'` in a code cell of the notebook.

4. Use the endpoint from Studio for Mistral 7B Instruct deployed via the Amazon SageMaker JumpStart hub

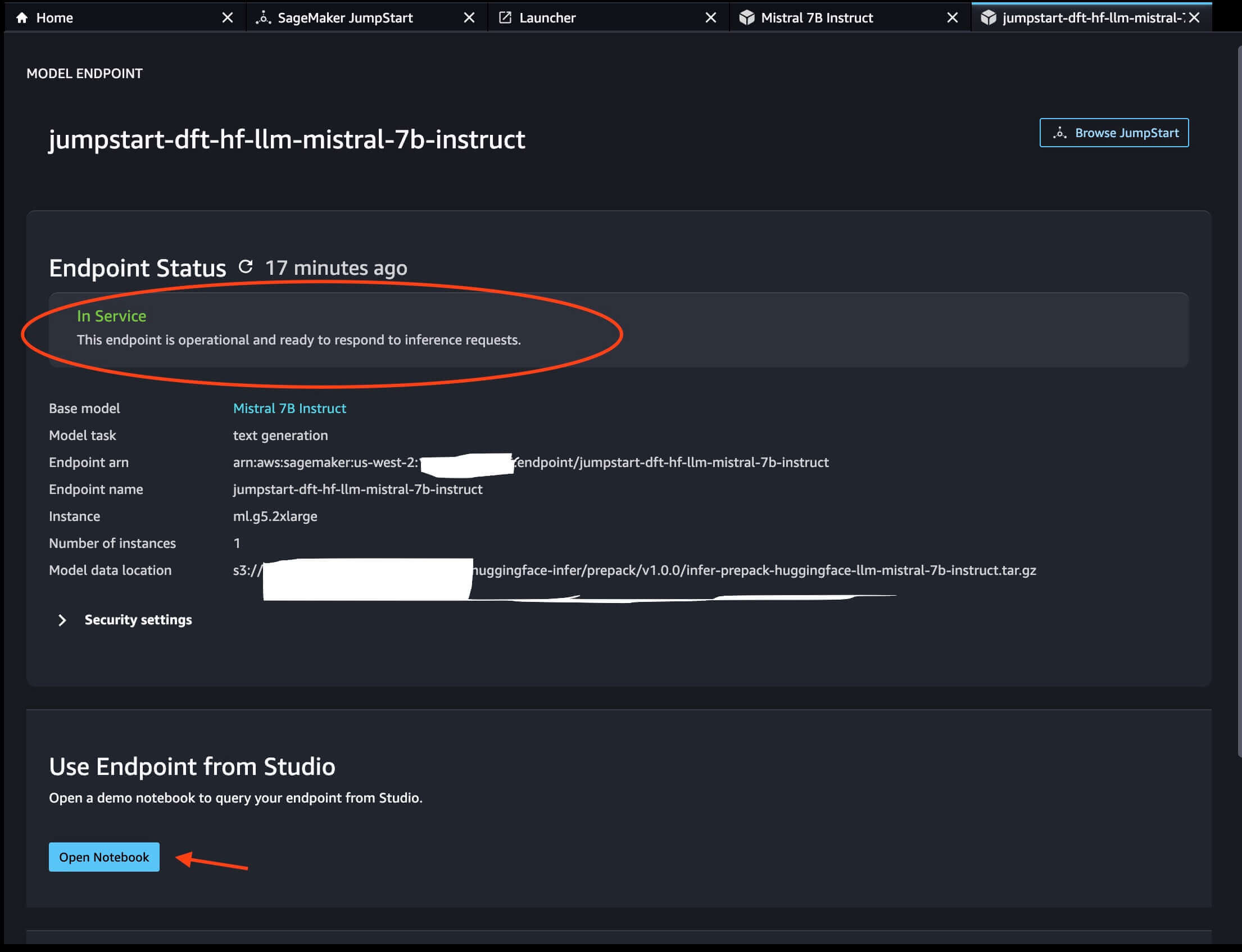

We have successfully deployed Mistral 7B Instruct on Amazon SageMaker JumpStart. Now let's use the SageMaker endpoint to run inference with Mistral 7B Instruct.

Inference Mistral 7B Instruct

1. Set Up the Notebook Environment for SageMaker Endpoint Inference of Mistral 7B Instruct

A notebook is launched for us. Let's walk through the inference code.

Import the necessary libraries, json and boto3.

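```python
import json

import boto3
```

Query the endpoint that you created:

```python
# Name of the endpoint deployed above; replace it if your endpoint has a different name
endpoint_name = "jumpstart-dft-hf-llm-mistral-7b-instruct"


def query_endpoint(payload):
    """Send a JSON payload to the endpoint and return the decoded JSON response."""
    client = boto3.client("sagemaker-runtime")
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload).encode("utf-8"),
    )
    # The response body is a stream of UTF-8 encoded JSON
    response = response["Body"].read().decode("utf8")
    return json.loads(response)
```

Supported parameters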

The model accepts the following parameters at inference time:

- max_length: The model generates text until the output length (which includes the input context length) reaches max_length. If specified, it must be a positive integer.
- max_new_tokens: The model generates text until the output length (excluding the input context length) reaches max_new_tokens. If specified, it must be a positive integer.
- num_beams: Number of beams used in beam search. If specified, it must be an integer greater than or equal to num_return_sequences.
- no_repeat_ngram_size: The model ensures that no sequence of no_repeat_ngram_size words is repeated in the output. If specified, it must be a positive integer greater than 1.
- temperature: Controls the randomness of the output. A higher temperature makes low-probability words more likely, and a lower temperature concentrates on high-probability words. As temperature approaches 0, decoding becomes greedy. If specified, it must be a positive float.
- early_stopping: If True, text generation finishes when all beam hypotheses reach the end-of-sentence token. If specified, it must be a boolean.
- do_sample: If True, the next word is sampled according to its likelihood. If specified, it must be a boolean.
- top_k: At each step of text generation, sample only from the top_k most likely words. If specified, it must be a positive integer.
- top_p: At each step of text generation, sample from the smallest possible set of words whose cumulative probability reaches top_p. If specified, it must be a float between 0 and 1.
- return_full_text: If True, the input text is included in the generated output. If specified, it must be a boolean. The default value is False.
- stop: If specified, it must be a list of strings. Text generation stops as soon as any one of the specified strings is generated.
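The rules in the list above can be checked on the client before a request is sent. The sketch below uses a hypothetical helper, build_payload, that is not part of boto3 or the SageMaker SDK: it accepts a prompt plus any subset of the listed parameters, rejects unknown names and values that break the stated rules, and returns a dict in the `{"inputs": ..., "parameters": ...}` shape that the examples in this tutorial send. The endpoint still performs its own validation, and the exact bounds for top_p are an assumption.

```python
from typing import Any, Callable, Dict


def _is_int(value: Any) -> bool:
    # bool is a subclass of int in Python, so reject True/False explicitly
    return isinstance(value, int) and not isinstance(value, bool)


def _is_number(value: Any) -> bool:
    return isinstance(value, (int, float)) and not isinstance(value, bool)


# One check per documented parameter. num_return_sequences is not in the list,
# but the num_beams rule refers to it, so it is accepted here as an assumption.
CHECKS: Dict[str, Callable[[Any], bool]] = {
    "max_length": lambda v: _is_int(v) and v > 0,
    "max_new_tokens": lambda v: _is_int(v) and v > 0,
    "num_beams": lambda v: _is_int(v) and v > 0,
    "num_return_sequences": lambda v: _is_int(v) and v > 0,
    "no_repeat_ngram_size": lambda v: _is_int(v) and v > 1,
    "temperature": lambda v: _is_number(v) and v > 0,
    "early_stopping": lambda v: isinstance(v, bool),
    "do_sample": lambda v: isinstance(v, bool),
    "top_k": lambda v: _is_int(v) and v > 0,
    # Assumption: "between 0 and 1" is treated as 0 < top_p <= 1
    "top_p": lambda v: _is_number(v) and 0 < v <= 1,
    "return_full_text": lambda v: isinstance(v, bool),
    "stop": lambda v: isinstance(v, list) and all(isinstance(s, str) for s in v),
}


def build_payload(prompt: str, **params: Any) -> Dict[str, Any]:
    """Check params against the documented rules and return an endpoint request payload."""
    for name, value in params.items():
        if name not in CHECKS:
            raise ValueError(f"unknown parameter: {name}")
        if not CHECKS[name](value):
            raise ValueError(f"invalid value for {name}: {value!r}")
    # Cross-parameter rule: num_beams must be >= num_return_sequences
    if "num_beams" in params and "num_return_sequences" in params:
        if params["num_beams"] < params["num_return_sequences"]:
            raise ValueError("num_beams must be >= num_return_sequences")
    return {"inputs": prompt, "parameters": params}
```

For example, `build_payload(prompt, max_new_tokens=256, do_sample=True)` returns the same payload that is built by hand below, while `build_payload(prompt, top_p=1.5)` raises a ValueError before any request reaches the endpoint.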

You can specify any subset of these parameters when invoking the endpoint. Next, we show how to invoke the endpoint with some of them.

```python
from typing import Dict, List


def format_instructions(instructions: List[Dict[str, str]]) -> str:
    """Format instructions where conversation roles must alternate user/assistant/user/assistant/..."""
    prompt: List[str] = []
    # Each completed user/assistant exchange becomes "<s>[INST] question [/INST] answer</s>"
    for user, answer in zip(instructions[::2], instructions[1::2]):
        prompt.extend(["<s>", "[INST] ", user["content"].strip(), " [/INST] ", answer["content"].strip(), "</s>"])
    # The final user message is left open so the model generates the next assistant turn
    prompt.extend(["<s>", "[INST] ", instructions[-1]["content"].strip(), " [/INST] "])
    return "".join(prompt)


def print_instructions(prompt: str, response: List[Dict[str, str]]) -> None:
    """Print the prompt and the first generated text in bold-labelled sections."""
    bold, unbold = "\033[1m", "\033[0m"
    print(f"{bold}> Input{unbold}\n{prompt}\n\n{bold}> Output{unbold}\n{response[0]['generated_text']}\n")
```

Now format a single-turn prompt and send it to the endpoint:

```python
instructions = [{"role": "user", "content": "what is the recipe of mayonnaise?"}]
prompt = format_instructions(instructions)
payload = {
    "inputs": prompt,
    "parameters": {"max_new_tokens": 256, "do_sample": True},
}
response = query_endpoint(payload)
print_instructions(prompt, response)
```

Output

> Input

<s>[INST] what is the recipe of mayonnaise? [/INST]

> Output

1. In a large mixing bowl, whisk together 3 egg yolks and 1 tablespoon of water until well combined. Allow the mixture to stand at room temperature for 30 minutes.

2. In a separate bowl, combine 1 tablespoon of lemon juice, 1 tablespoon of distilled white vinegar, and 1/2 cup of vegetable oil.

3. Slowly drizzle the lemon juice mixture into the egg yolk mixture, whisking constantly as you go.

4. Continue whisking until the mixture thickens and emulsifies, forming a smooth, creamy texture.

5. Taste and adjust the seasoning with salt and/or sugar as desired.

6. Serve immediately or store in an airtight container in the refrigerator for up to 7 days.

Keep in mind that it is possible to make a dairy-free version of this recipe by using coconut oil in place of the vegetable oil and/or soy-free mayonnaise in place of the egg yolk mixture.
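To hold a multi-turn conversation, the history passed to format_instructions must alternate user and assistant roles, starting and ending with a user turn, but format_instructions itself does not check this. The hypothetical helpers below (not part of any SDK) enforce that rule and append the model's reply plus the next user message to a copy of the history. They read the reply from `response[0]["generated_text"]`, the response shape print_instructions uses, and assume return_full_text is left at its default of False so that the generated text contains only the reply.

```python
from typing import Any, Dict, List

Message = Dict[str, str]


def validate_roles(instructions: List[Message]) -> None:
    """Raise ValueError unless roles alternate user/assistant/... and the last turn is the user's."""
    if not instructions:
        raise ValueError("instructions must not be empty")
    for i, message in enumerate(instructions):
        expected = "user" if i % 2 == 0 else "assistant"
        if message.get("role") != expected:
            raise ValueError(f"message {i} has role {message.get('role')!r}, expected {expected!r}")
    # An even length would mean the history ends with an assistant turn
    if len(instructions) % 2 == 0:
        raise ValueError("the last message must come from the user")


def continue_conversation(
    instructions: List[Message], response: List[Dict[str, Any]], next_user_message: str
) -> List[Message]:
    """Return a new history with the model's reply and the next user message appended."""
    validate_roles(instructions)
    reply = response[0]["generated_text"]
    # Build a new list so the caller's history is not modified
    return instructions + [
        {"role": "assistant", "content": reply},
        {"role": "user", "content": next_user_message},
    ]
```

The returned history can be passed straight to format_instructions to build the next prompt, which produces a conversation in the same shape as the one below.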

Next, let's build a multi-turn prompt in the format shown in the following code block.

```python
instructions = [
    {"role": "user", "content": "I am going to Paris, what should I see?"},
    {
        "role": "assistant",
        "content": """\
Paris, the capital of France, is known for its stunning architecture, art museums, historical landmarks, and romantic atmosphere. Here are some of the top attractions to see in Paris:

1. The Eiffel Tower: The iconic Eiffel Tower is one of the most recognizable landmarks in the world and offers breathtaking views of the city.

2. The Louvre Museum: The Louvre is one of the world's largest and most famous museums, housing an impressive collection of art and artifacts, including the Mona Lisa.

3. Notre-Dame Cathedral: This beautiful cathedral is one of the most famous landmarks in Paris and is known for its Gothic architecture and stunning stained glass windows.

These are just a few of the many attractions that Paris has to offer. With so much to see and do, it's no wonder that Paris is one of the most popular tourist destinations in the world.""",
    },
    {"role": "user", "content": "What is so great about #1?"},
]
prompt = format_instructions(instructions)
payload = {
    "inputs": prompt,
    "parameters": {"max_new_tokens": 256, "do_sample": True},
}
response = query_endpoint(payload)
print_instructions(prompt, response)
```

Output

> Input

<s>[INST] I am going to Paris, what should I see? [/INST] Paris, the capital of France, is known for its stunning architecture, art museums, historical landmarks, and romantic atmosphere. Here are some of the top attractions to see in Paris:

1. The Eiffel Tower: The iconic Eiffel Tower is one of the most recognizable landmarks in the world and offers breathtaking views of the city.

2. The Louvre Museum: The Louvre is one of the world's largest and most famous museums, housing an impressive collection of art and artifacts, including the Mona Lisa.

3. Notre-Dame Cathedral: This beautiful cathedral is one of the most famous landmarks in Paris and is known for its Gothic architecture and stunning stained glass windows.

These are just a few of the many attractions that Paris has to offer. With so much to see and do, it's no wonder that Paris is one of the most popular tourist destinations in the world.</s><s>[INST] What is so great about #1? [/INST]

> Output

1. The Eiffel Tower: The Eiffel Tower is a major icon of Paris and France, and is considered one of the most recognizable landmarks in the world. It was built in 1889 and stands at over 1,083 feet tall. The tower offers breathtaking views of the city from its observation deck, and is also a popular spot for photography. It is a beloved symbol of love and has served as a popular spot for weddings and romantic proposals. Some people say it is one of the most romantic destinations in the world.

Let's build another prompt in the same format, shown in the following code block.

```python
instructions = [
    {
        "role": "user",
        "content": "In Bash, how do I list all text files in the current directory (excluding subdirectories) that have been modified in the last month?",
    }
]
prompt = format_instructions(instructions)
payload = {
    "inputs": prompt,
    "parameters": {"max_new_tokens": 256, "do_sample": True, "temperature": 0.2},
}
response = query_endpoint(payload)
print_instructions(prompt, response)
```

Output

> Input

<s>[INST] In Bash, how do I list all text files in the current directory (excluding subdirectories) that have been modified in the last month? [/INST]

> Output

1. Use the `find` command to search for text files in the current directory (excluding subdirectories) that have been modified in the last month. The `-type f` option specifies that only files should be searched, and the `-name "*.txt"` option specifies that only files with a `.txt` extension should be included. The `-mtime -30` option specifies that only files modified in the last 30 days should be included.

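```bash
find . -type f -name "*.txt" -mtime -30
```

2. Use the `ls` command with the `-l` option to list the files in long format, which includes the modification time. The `-1` option specifies that only the file names should be listed.

```bash
ls -l -1
```

3. Combine the two commands using the `grep` command to filter the output to only include lines that contain the `-mtime -30` option.

```bash
find . -type f -name "*.txt" -mtime -30 | gre
```

The response is cut off mid-command, most likely because it reached the max_new_tokens limit of 256. Note also that find searches subdirectories by default, so excluding them as the question asks requires -maxdepth 1, for example find . -maxdepth 1 -type f -name "*.txt" -mtime -30.

Let's try one more prompt to see how Mistral 7B Instruct responds.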

```python
instructions = [
    {
        "role": "user",
        "content": "What is the difference between inorder and preorder traversal? Give an example in Python.",
    }
]
prompt = format_instructions(instructions)
payload = {
    "inputs": prompt,
    "parameters": {"max_new_tokens": 256, "do_sample": True, "temperature": 0.2},
}
response = query_endpoint(payload)
print_instructions(prompt, response)
```

Output

> Input

<s>[INST] What is the difference between inorder and preorder traversal? Give an example in Python. [/INST]

> Output

1. Inorder traversal: It is a way of traversing a tree or a graph where we first visit the left subtree, then the root, and finally the right subtree. This is done in a left-root-right order.

Example:

```python
def inorder_traversal(root):
    if root:
        inorder_traversal(root.left)
        print(root.val)
        inorder_traversal(root.right)

# Driver code
root = Node(5)
root.left = Node(3)
root.right = Node(7)
root.left.left = Node(1)
root.left.right = Node(4)
inorder_traversal(root)
```

Output:

```
1
3
5
4
7
```

2. Preorder traversal: It is a way of traversing a tree or a graph where we first visit the root, then the left subtree, and finally the right subtree. This is done in a root-left-right order.

Example:

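``

The response is cut off before the preorder example, most likely because it reached the max_new_tokens limit of 256. The model's inorder example is also flawed: it relies on a Node class that it never defines, and an inorder traversal of that tree prints 1, 3, 4, 5, 7 rather than the 1, 3, 5, 4, 7 shown.

Summary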

In this tutorial, we learned how to deploy Mistral 7B Instruct on SageMaker JumpStart using an ml.g5.2xlarge instance and run inference against the resulting endpoint.